The Role of LIDAR vs. Camera Systems in Self-Driving Cars

21 January 2026

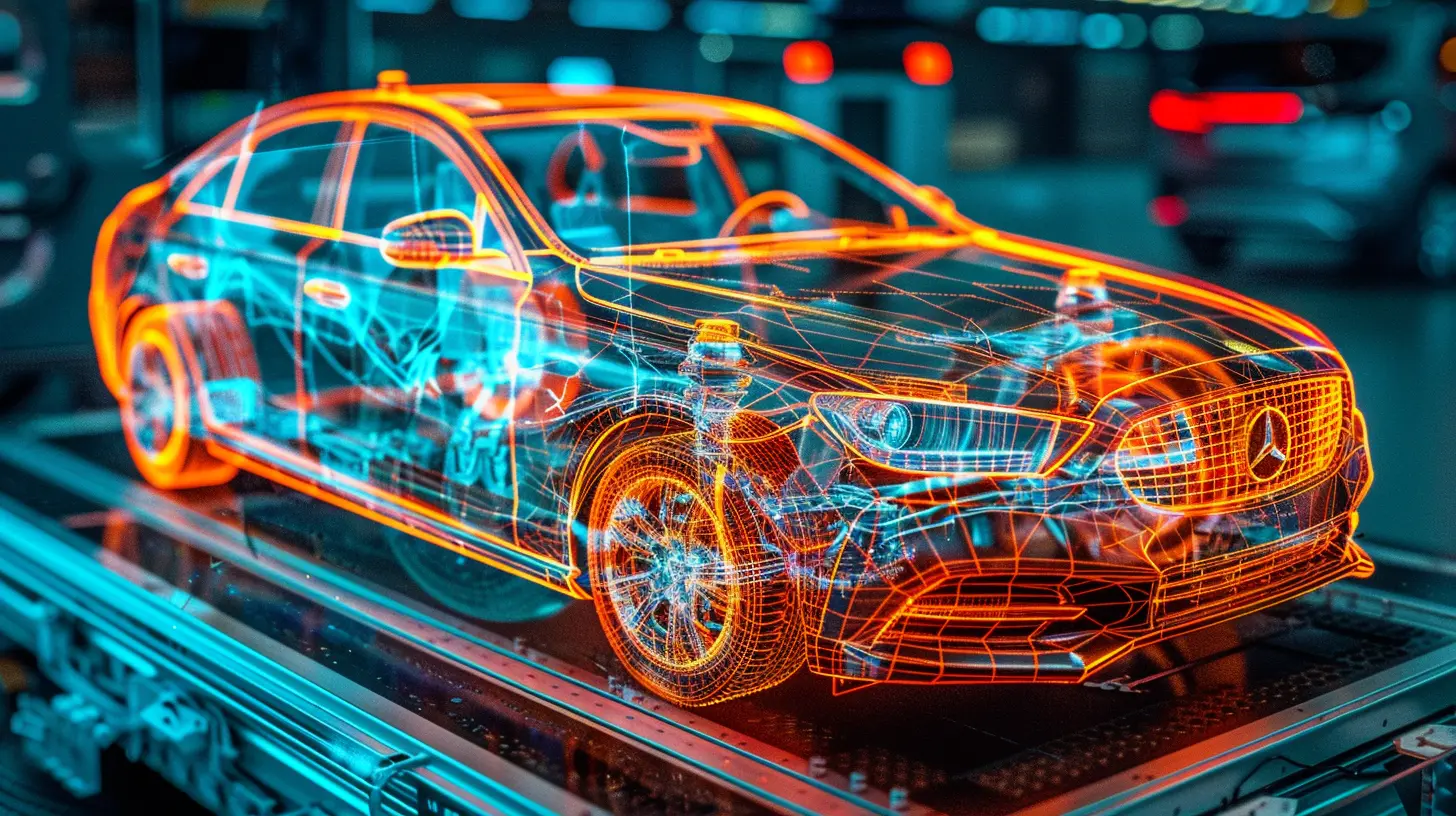

Self-driving cars are no longer a distant dream—they’re cruising our roads, learning from every lane change and stop sign. But have you ever wondered what lets these futuristic vehicles “see” the road? Two key technologies steal the spotlight: LIDAR and camera systems. These two play vital roles in how autonomous cars perceive their environment. But which one is better? Or, do they need each other to function at their best?

In this article, we’ll unpack the key differences, strengths, and trade-offs between LIDAR and camera systems in the self-driving world. Buckle up—we're going for a deep dive.

What Exactly Is LIDAR?

LIDAR stands for Light Detection and Ranging. Imagine a bat sending out sound waves and then listening for the echo to know where things are. LIDAR does something similar, but instead of sound, it fires up to millions of laser pulses every second and measures how long each one takes to bounce back. Sounds cool, right?

This light-based cousin of radar builds a precise 3D map of the environment around the vehicle. It picks up everything (cars, cyclists, trees, you name it) with pinpoint accuracy.

How Does LIDAR Work?

Let’s break it down:

- A LIDAR sensor emits laser beams in quick succession.

- These beams hit objects and bounce back to the sensor.

- The round-trip time of each pulse, multiplied by the speed of light and halved, gives the distance to the point it hit.

- These data points create a detailed point cloud map.
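Those four steps boil down to a time-of-flight calculation followed by a change of coordinates: distance is half the round-trip time multiplied by the speed of light, and each distance plus the beam's angles becomes one 3D point. Here is a minimal Python sketch, assuming each return arrives as a round-trip time plus the beam's azimuth and elevation angles. The function names and the axis convention (x forward, y left, z up) are illustrative choices, not any particular sensor's API.

```python
import math

# Speed of light in vacuum; exact by the SI definition of the metre.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target in metres.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple:
    """Turn one return (range plus beam angles) into an (x, y, z) point.

    Assumed sensor-frame convention: x forward, y left, z up; azimuth is
    measured from x toward y, elevation up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def build_point_cloud(returns):
    """returns: iterable of (round_trip_s, azimuth_deg, elevation_deg) tuples."""
    return [to_point(range_from_time_of_flight(t), az, el) for t, az, el in returns]


# A pulse that comes back after 200 nanoseconds hit something about 30 m away.
print(round(range_from_time_of_flight(200e-9), 2))  # 29.98

cloud = build_point_cloud([(200e-9, 0.0, 0.0), (100e-9, 90.0, -2.0)])
for x, y, z in cloud:
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```

A real sensor also corrects for its own motion during a sweep and filters out noisy returns; this sketch only shows the geometry.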

So, LIDAR is like giving your car a pair of laser eyes that can measure how far away everything is, and a roof-mounted spinning unit can do it in a full 360 degrees. Super sci-fi, right?

What Are Camera Systems?

Now let’s talk about cameras. These systems use traditional optical cameras, kinda like the one on your phone, just better. They capture images and feed them to powerful onboard computers that use artificial intelligence to identify and understand what’s in the scene: road signs, pedestrians, traffic lights, lane markings, you name it.

How Do Cameras Help?

Here’s the magic:

- Cameras capture visual images of the car’s surroundings.

- AI and machine learning algorithms process these images.

- The system identifies objects in real time—think stop signs, lane lines, brake lights.

- Cameras provide rich color and detail that LIDAR just can’t.

So, if LIDAR gives the car a skeleton map, cameras fill in the details—like painting the bones with color and clothes.

LIDAR vs. Camera: A Head-to-Head Comparison

Let’s put these two head-to-head. Here’s what matters most when it comes to autonomous driving:

| Feature | LIDAR | Camera Systems |
|---------|-------|----------------|
| Depth Perception | Excellent (measures distance directly) | Inferior (estimates distance from 2D images) |
| Color & Detail | Poor (doesn’t detect color) | Excellent (rich visuals and fine detail) |
| Performance in Poor Lighting | Great (works day or night) | Challenging (struggles in low light or glare) |
| Object Recognition | Moderate (based on shape/movement) | Superior (trained on vast image datasets) |
| Weather Sensitivity | Affected by fog, rain, dust | Affected by bright light, darkness, precipitation |
| Cost | Expensive | Relatively cheap |
| Processing Power Needed | High | Moderate to High |

The Case for LIDAR

LIDAR fans argue that when it comes to accuracy, nothing beats laser beams. It excels at giving self-driving cars a geometric understanding of the world. Here’s why some engineers swear by it:

- 360-Degree Awareness: LIDAR provides a full field of view around the car.

- Precision: It measures the distance to obstacles to within a few centimeters.

- Independence from Light: Because it supplies its own laser light, it works just as well in the dark.

But it’s not all rosy. LIDAR has some issues worth mentioning. It’s pricey: some systems can cost thousands of dollars per unit. Also, it doesn’t capture visual details like color, so it can’t tell whether a traffic light is red or green or read the words on a sign.

The Case for Cameras

Cameras are the eyes of the car. And like our own eyes, they see the rich visual details necessary for understanding the road.

- Recognizing Traffic Signs: Cameras can read written text, logos, and colors.

- Understanding Context: A camera can tell a police officer directing traffic from an ordinary pedestrian based on uniforms and hand gestures.

- Lower Cost: Much more affordable to implement across a fleet of vehicles.

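However, distance estimation with cameras is tricky. A camera captures a flat 2D image, so depth has to be inferred, for example by comparing two slightly offset views (stereo) or by using neural networks trained to estimate depth from a single image. That inference isn’t always reliable, especially in new or unpredictable environments. Plus, performance drops in bad weather or at night.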
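Stereo cameras are one common way to get depth from images, and they show why distance estimation gets shaky at range. With the standard pinhole stereo model, depth is Z = f · B / d, where f is the focal length in pixels, B the distance between the two cameras, and d the disparity (how many pixels the same point shifts between the left and right images). The minimal Python sketch below assumes a hypothetical rig (1000 px focal length, 30 cm baseline) and measures how far the depth estimate jumps when the disparity is misjudged by a single pixel. It is an illustration of the geometry, not how any specific carmaker's system estimates depth.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth by stereo triangulation with the pinhole model: Z = f * B / d.

    focal_px: focal length in pixels; baseline_m: distance between the two
    cameras; disparity_px: horizontal shift of the same point between images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


def depth_change_per_pixel(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """How far the depth estimate jumps if the disparity is misjudged by one pixel."""
    return (stereo_depth_m(focal_px, baseline_m, disparity_px)
            - stereo_depth_m(focal_px, baseline_m, disparity_px + 1))


# Hypothetical rig: 1000 px focal length, cameras 30 cm apart.
FOCAL_PX, BASELINE_M = 1000.0, 0.3
for d in (60, 30, 10, 5):
    z = stereo_depth_m(FOCAL_PX, BASELINE_M, d)
    err = depth_change_per_pixel(FOCAL_PX, BASELINE_M, d)
    print(f"disparity {d:2d} px -> depth {z:5.1f} m, one-pixel error ~{err:5.2f} m")
```

With these numbers, the same one-pixel slip costs about 0.3 m at 10 m but a full 10 m at 60 m. Depth error grows roughly with the square of distance, while a LIDAR's time-of-flight measurement doesn't degrade this way.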

Tesla’s Take: Camera-Only Approach

Elon Musk and Tesla took a bold stance: ditch LIDAR altogether. Tesla’s Full Self-Driving (FSD) system relies purely on cameras and neural networks. Musk argues that humans drive just fine using only their eyes, so why should cars need lasers?

Tesla’s camera-only system uses “vision-based” AI that mimics human perception. It’s elegant in concept and definitely cuts costs, but critics worry about its limitations in edge cases, such as foggy roads or sudden obstacles.

Waymo’s Strategy: LIDAR + Cameras

On the flip side, Waymo, which began as Google’s self-driving car project and is now part of Alphabet, believes in a sensor fusion model. They use LIDAR, cameras, AND radar. Why? Because redundancy means safety. If one sensor fails, another can back it up.

Their approach is kind of like dressing your vehicle in layers: LIDAR gives shape, cameras add detail, and radar senses motion, together giving the car a full sensory toolkit.
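One textbook way to let one sensor back up another is inverse-variance weighting: every working sensor reports a distance and how uncertain it is, precise sensors get more say, and a failed sensor simply drops out of the average. The minimal Python sketch below assumes each sensor reports a range to the same obstacle with independent, roughly Gaussian error; the sensor names and noise figures are made up, and this is a generic technique, not Waymo's actual fusion pipeline.

```python
from typing import Dict, Optional, Tuple

Reading = Optional[Tuple[float, float]]  # (range_m, std_dev_m), or None if the sensor is down


def fuse_ranges(readings: Dict[str, Reading]) -> Tuple[float, float]:
    """Inverse-variance weighted average of independent range estimates.

    Each working sensor is weighted by 1 / variance, so precise sensors count
    more. Sensors reporting None are skipped, so losing one sensor degrades
    the estimate instead of removing it. Returns (fused_range_m, fused_std_m).
    """
    weight_sum = 0.0
    weighted_total = 0.0
    for name, reading in readings.items():
        if reading is None:
            continue  # failed or blinded sensor: the others carry on
        range_m, std_m = reading
        if std_m <= 0:
            raise ValueError(f"{name}: standard deviation must be positive")
        weight = 1.0 / std_m ** 2
        weight_sum += weight
        weighted_total += weight * range_m
    if weight_sum == 0.0:
        raise RuntimeError("no working sensors")
    return weighted_total / weight_sum, (1.0 / weight_sum) ** 0.5


# Hypothetical readings for one obstacle ahead (noise figures are made up).
all_up = {"lidar": (25.02, 0.05), "camera": (26.1, 1.5), "radar": (25.3, 0.4)}
lidar_down = {"lidar": None, "camera": (26.1, 1.5), "radar": (25.3, 0.4)}
print(fuse_ranges(all_up))      # dominated by the precise lidar reading
print(fuse_ranges(lidar_down))  # less precise, but still an answer
```

When the LIDAR drops out, the fused uncertainty grows, which is exactly the signal a planner could use to slow down rather than drive blind.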

Sensor Fusion: Best of Both Worlds?

In practice, most autonomous driving systems today use a mix of sensors and combine their data, a concept known as sensor fusion. It makes sense. LIDAR tells you where things are. Cameras tell you what they are. When you combine them, the vehicle gains a solid understanding of its surroundings, both geometrically and visually.

Sensor fusion helps compensate for the weaknesses of any single system:

- Bad lighting? LIDAR steps up when cameras struggle.

- Bad weather? Radar can lend a hand.

- Need to read a traffic sign? Cameras to the rescue.

No one sensor is king. Together, they form a reliable team.
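The "LIDAR for where, camera for what" split can be sketched by projecting LIDAR points into the camera image and reading off the distance of the points that land inside a box the camera's detector has labeled. This minimal Python example assumes the points are already in the camera's coordinate frame (real systems need a calibrated LIDAR-to-camera transform for that), uses a simple pinhole camera model, and takes the detection box as given. The camera intrinsics, the box, and all function names are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]  # (x right, y down, z forward) in metres, camera frame


@dataclass
class Detection:
    label: str    # what the camera's detector thinks the object is
    u_min: float  # bounding box in pixel coordinates
    v_min: float
    u_max: float
    v_max: float


def project(point: Point, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame 3D point to pixel coordinates (u, v)."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, so it cannot appear in the image
    return fx * x / z + cx, fy * y / z + cy


def distance_to_detection(det: Detection, points: List[Point],
                          fx: float, fy: float, cx: float, cy: float) -> Optional[float]:
    """Median forward distance of the LIDAR points that land inside a camera box."""
    depths = []
    for p in points:
        uv = project(p, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if det.u_min <= u <= det.u_max and det.v_min <= v <= det.v_max:
            depths.append(p[2])
    return median(depths) if depths else None


# Hypothetical 1280x720 camera: 1000 px focal length, principal point at the image center.
FX = FY = 1000.0
CX, CY = 640.0, 360.0
points = [(0.5, 0.2, 12.0), (0.6, -0.5, 12.2), (5.0, 0.0, 30.0), (0.0, 0.0, -3.0)]
box = Detection("pedestrian", u_min=650, v_min=300, u_max=720, v_max=420)
dist = distance_to_detection(box, points, FX, FY, CX, CY)
print(f"{box.label} about {dist:.1f} m ahead")  # pedestrian about 12.1 m ahead
```

Taking the median rather than the nearest point keeps a single stray return (say, from the road surface at the edge of the box) from skewing the answer.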

Can Cameras Replace LIDAR?

This is the billion-dollar question. Some believe that as AI improves, camera-only systems will get so smart they won’t need LIDAR. Software will make up for hardware shortcomings, at least in theory.

But that’s a big leap of faith. Cameras still struggle with distance estimation and low-light conditions. For now, LIDAR offers an extra layer of safety that’s hard to ignore.

Cost vs. Safety: The Ongoing Debate

Let’s talk numbers. LIDAR used to cost tens of thousands of dollars, too steep for mass-market vehicles. But prices are dropping thanks to new manufacturing techniques and competition. Some LIDAR units now cost under $500.

Cameras have always been cheaper, and that’s why they’re appealing for wide-scale adoption. But is saving money worth sacrificing safety features? That’s what automakers have to ask themselves. For luxury autonomous cars, adding LIDAR may be a no-brainer. For budget models, it might be a tough call.

The Road Ahead: What’s the Future?

The future likely lies in smarter, cheaper, and more efficient sensor fusion systems. As AI and hardware continue to evolve, we’ll see better integration of LIDAR, radar, and cameras into sleek, reliable packages.

And let’s not forget regulatory standards. Governments may mandate certain sensor minimums for safety, which would guide the mix of technologies used.

In the end, it's not about “LIDAR vs. camera” but about how both can work together. Think of them not as rivals, but as teammates passing the baton to get us safely to our destinations.

Final Thoughts

So, who wins in the battle of LIDAR vs. camera systems? The truth is, there’s no clear winner right now. Each brings unique strengths to the table. LIDAR shines in precision and range. Cameras excel in context and recognition.

If you want a truly safe self-driving car, it needs to “see” the world in as many ways as possible. That’s where sensor fusion steps in. By combining LIDAR, cameras, and even radar, we give cars a multidimensional understanding of the world.

Driving is hard—even for humans. So, let’s equip our robot chauffeurs with the best set of eyes we can.

All images in this post were generated using AI tools.

Category: Autonomous Vehicles

Author: John Peterson

Discussion

Merida Morris

While LIDAR might be the brainiac on the road, cameras are the selfie enthusiasts—both just hoping to avoid a crash!

January 22, 2026 at 3:55 AM